Use AI as a Reverse Search Engine

My previous article was all about how AI can’t be trusted. Maybe you think this means I’m a Luddite, raging against the new technology of the day.

That’s pretty far from the truth. If anything, being at the cutting edge of technology gives me a pretty good view of what new developments are and are not good at. I maintain that AI chatbots are, as of right now, somewhat questionable at giving you reliable answers to questions, or explanations of topics you’re not already familiar with, particularly in niche fields or in areas where the details matter. You can use them for this if you want, but be careful and double-check the answers, which usually means going to a search engine to verify whatever they tell you.

But AI chatbots have one major strength: you can put a lot of words into them, and they’ll generally understand those words.

This is something that’s unprecedented in the history of computing, and it lets you do the opposite of what you do with a search engine. Instead of giving it a term and asking it to explain that term, give it an explanation of what you want, and ask it to suggest terms to look into.

The problem with search

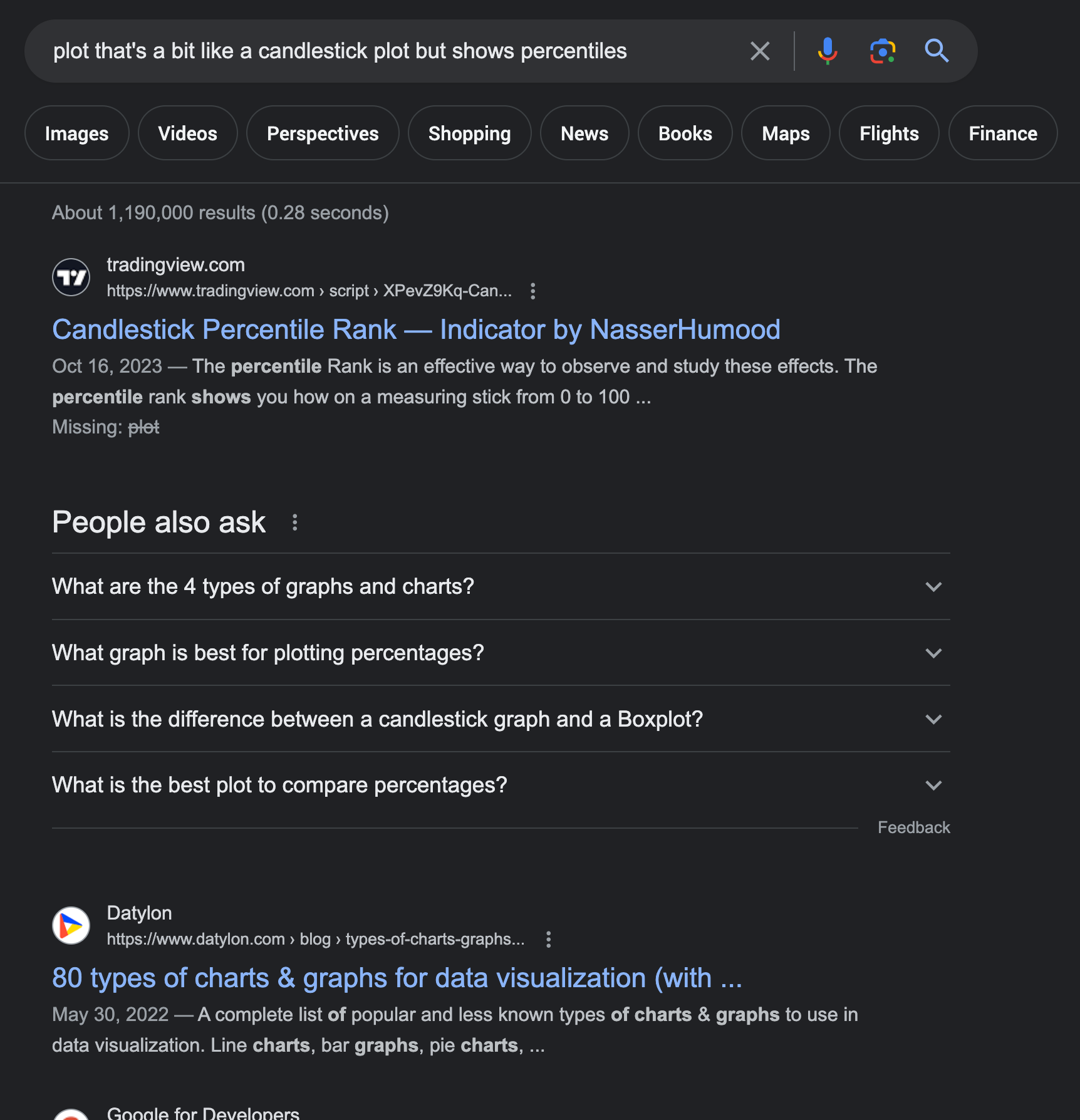

Search engines have a problem: you need to know what to search for, and you might not know the right terms or jargon. Sometimes you can kind of type a description into the search bar and come up with an answer, but this doesn’t always work.

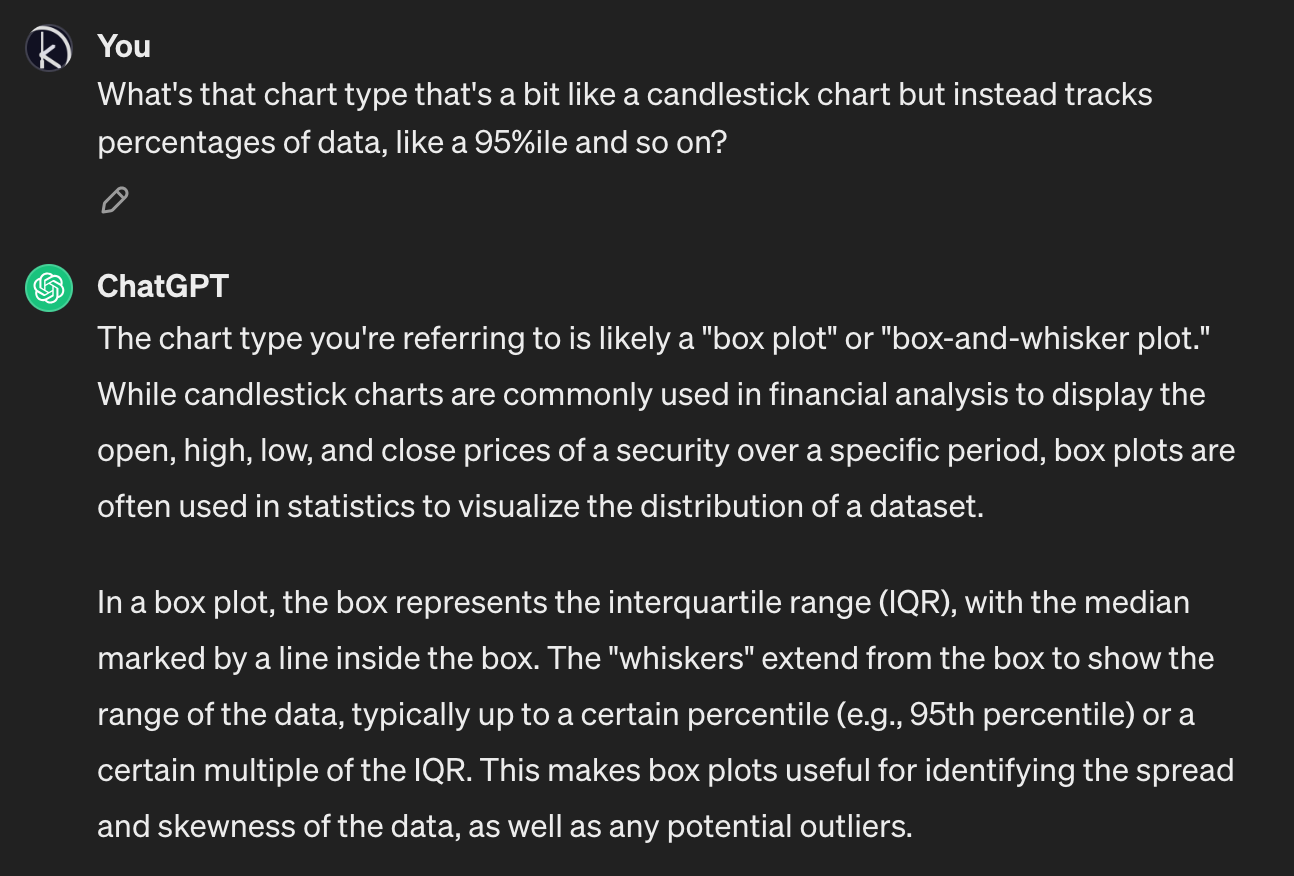

I’d forgotten the name of this type of chart, and didn’t really know what to search for to get the right answer.

This isn’t a problem with AI chatbots: you can give them a whole paragraph of text describing what you want, and they’ll easily process it and give you an answer.

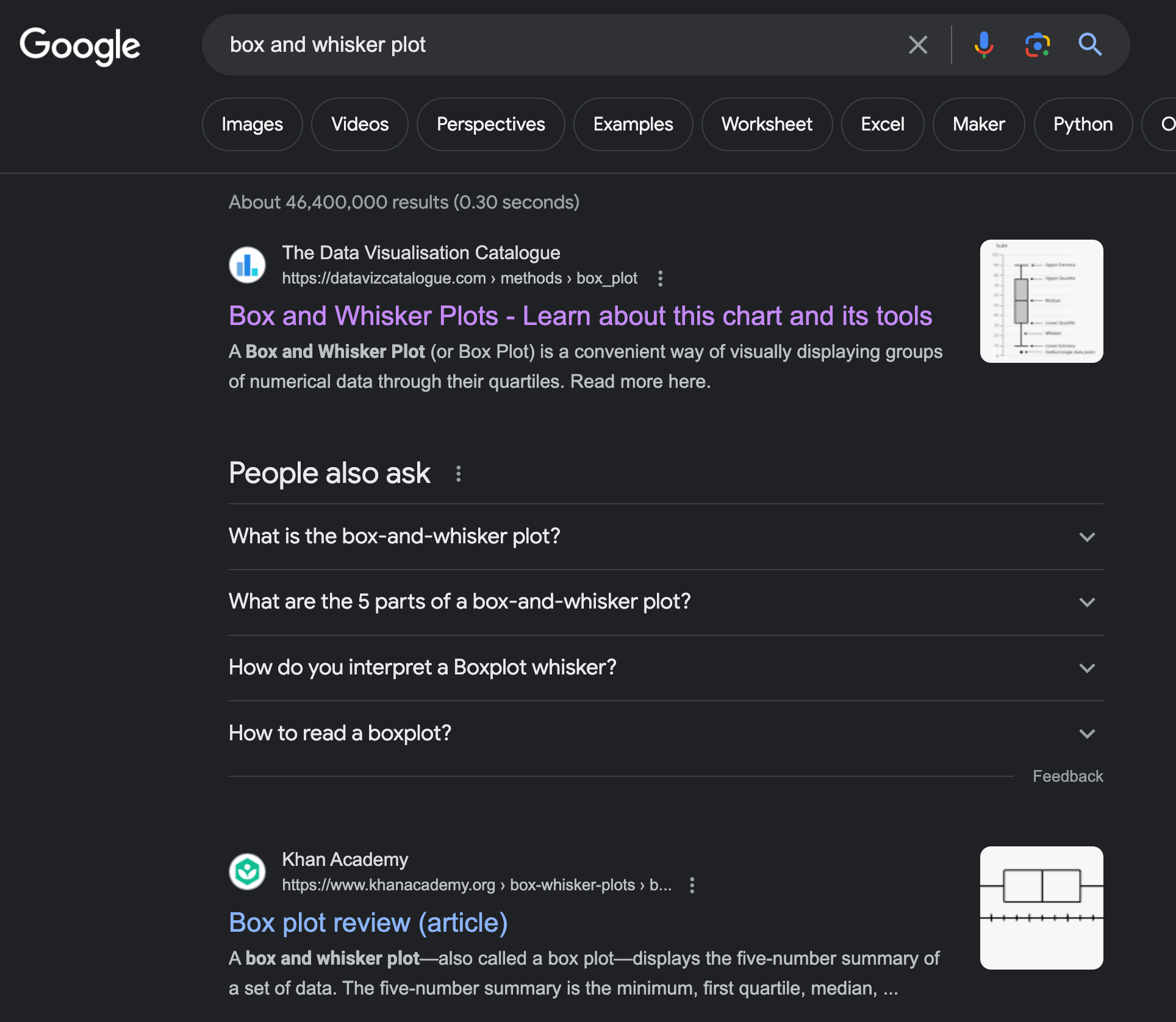

This gives you a jumping-off point for your own research. You can take that back to a search engine in order to verify the answer is what you want.

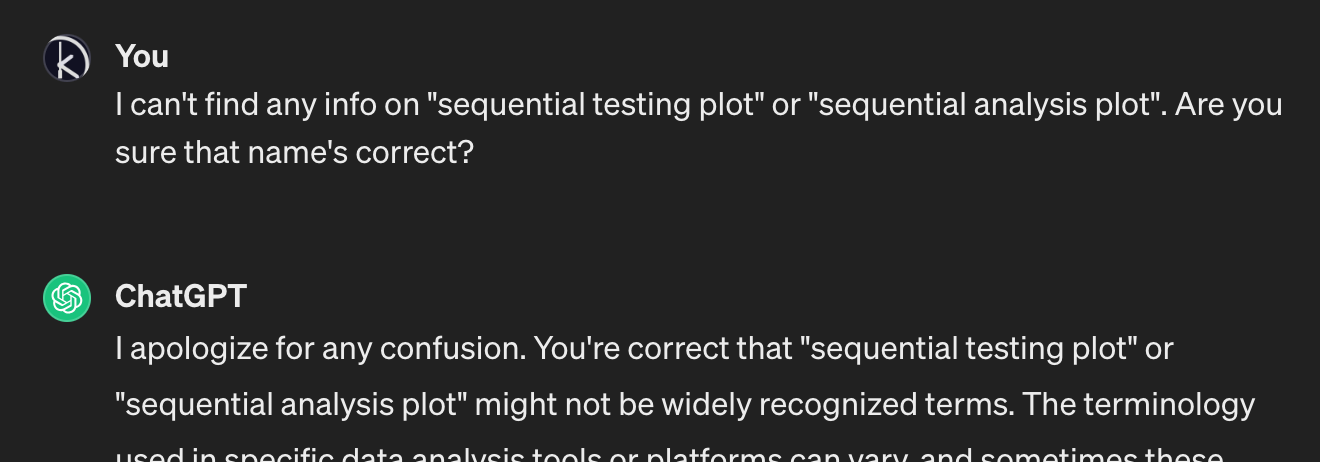

It’s still important to verify the answer it gives you, and to do so in an actual search engine. Sometimes it still makes things up. You don’t want to be like that one lawyer.

To be clear, I did this after searching for these terms on Google (and finding nothing). I just wanted to see if the bot would double down on its hallucination. Don’t trust the bot to tell you whether something’s real or not.

Some more examples

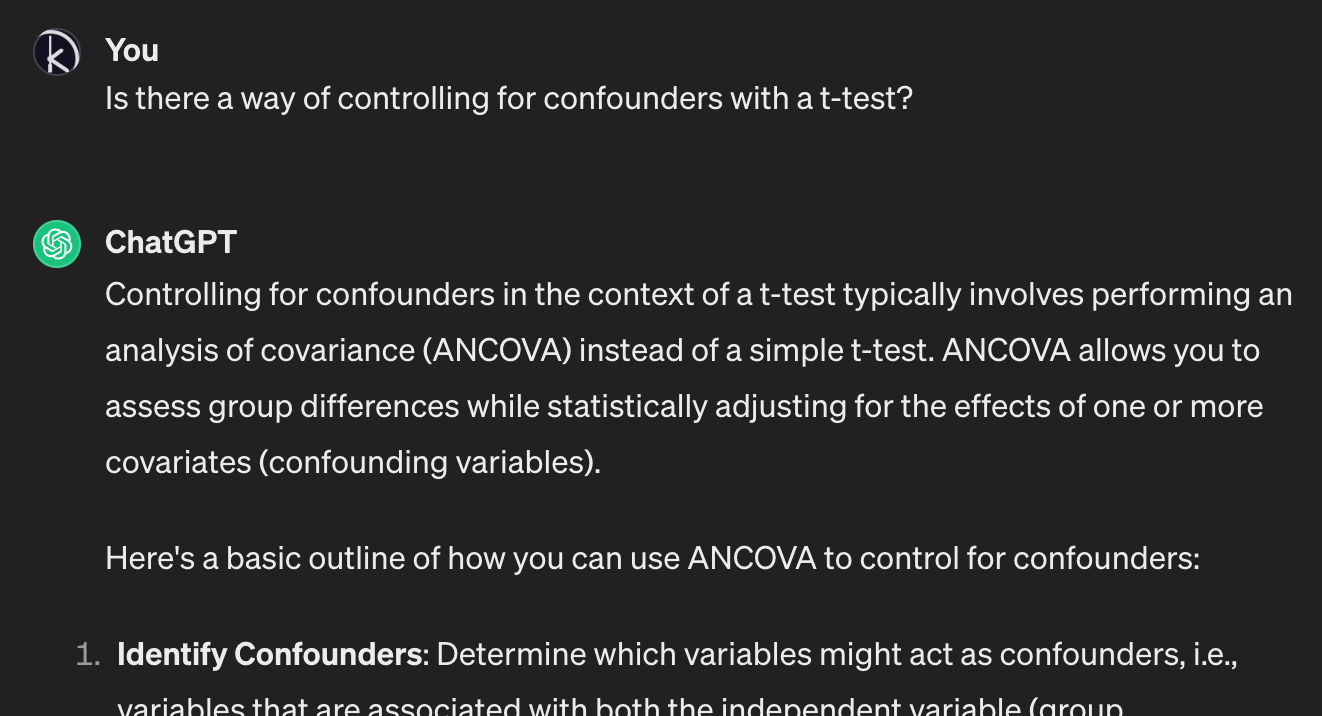

Statistics

This is especially useful when diving into a well-established field that you’re new to and don’t know the jargon for. I had to do some statistical analysis for a personal project recently, and here’s how I approached it:

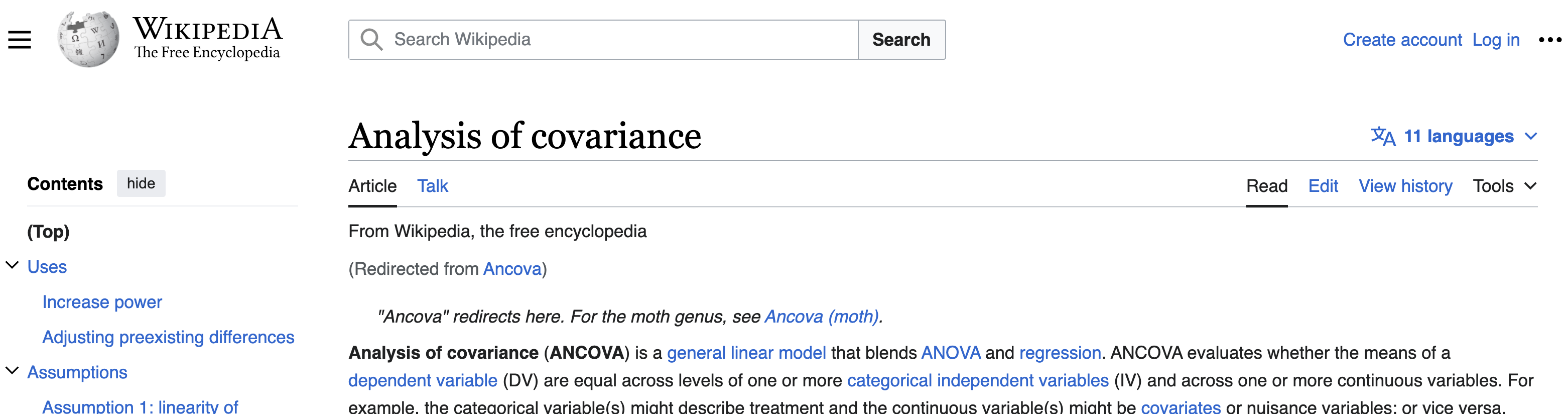

And of course, I didn’t take the AI at its word, but verified that this was actually what I wanted.

After more questioning, the AI pointed me to a specific function in the statsmodels Python library which I could use to easily run an ANCOVA test. I verified this in statsmodels’s documentation.

The value here is not necessarily in getting the AI to do the analysis for me, but in immediately pinpointing a specific starting point and telling me what to search for. I didn’t know ANCOVA or this library existed before starting the project, and just having them as a starting point likely shaved off an hour or more of confused searching.

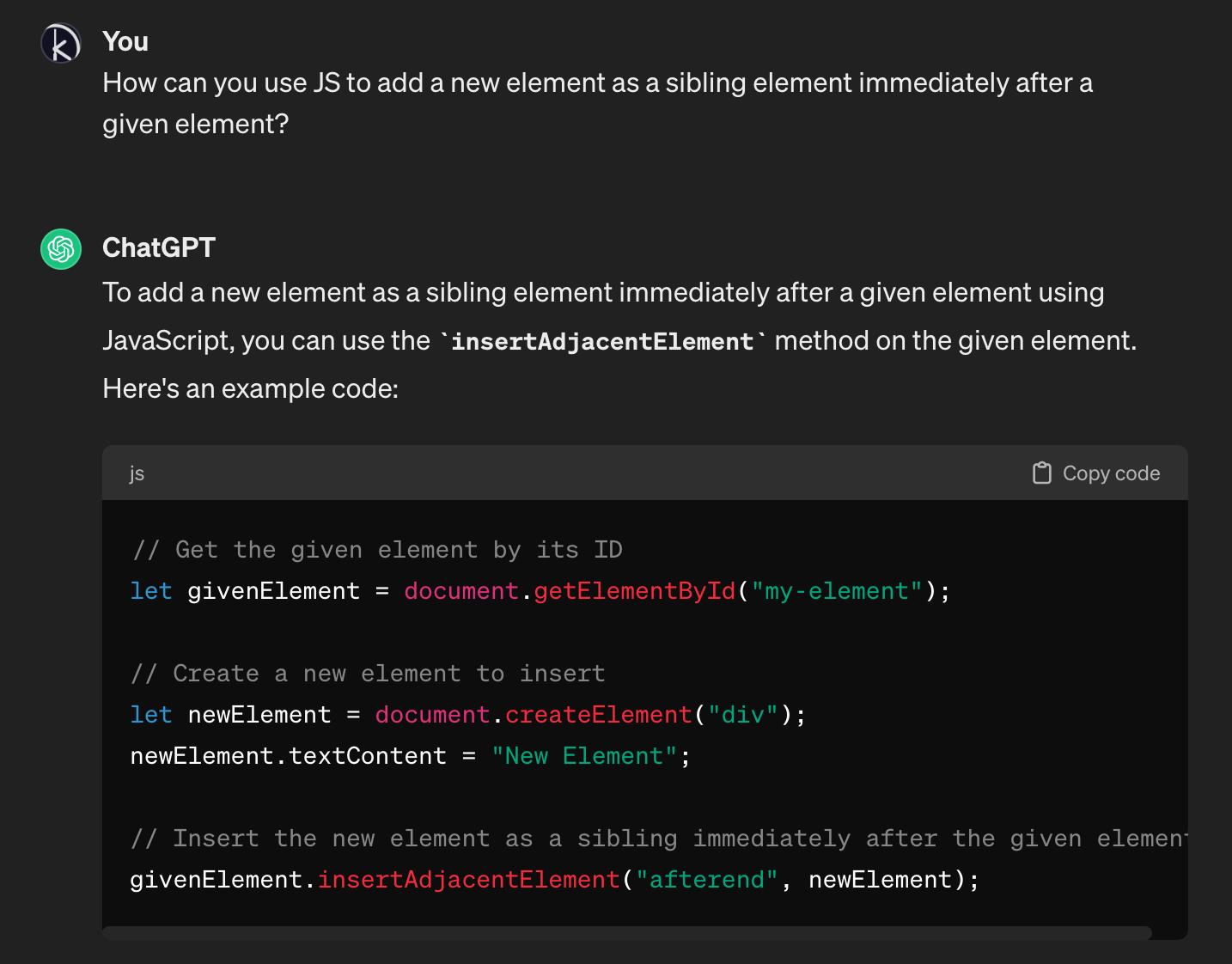

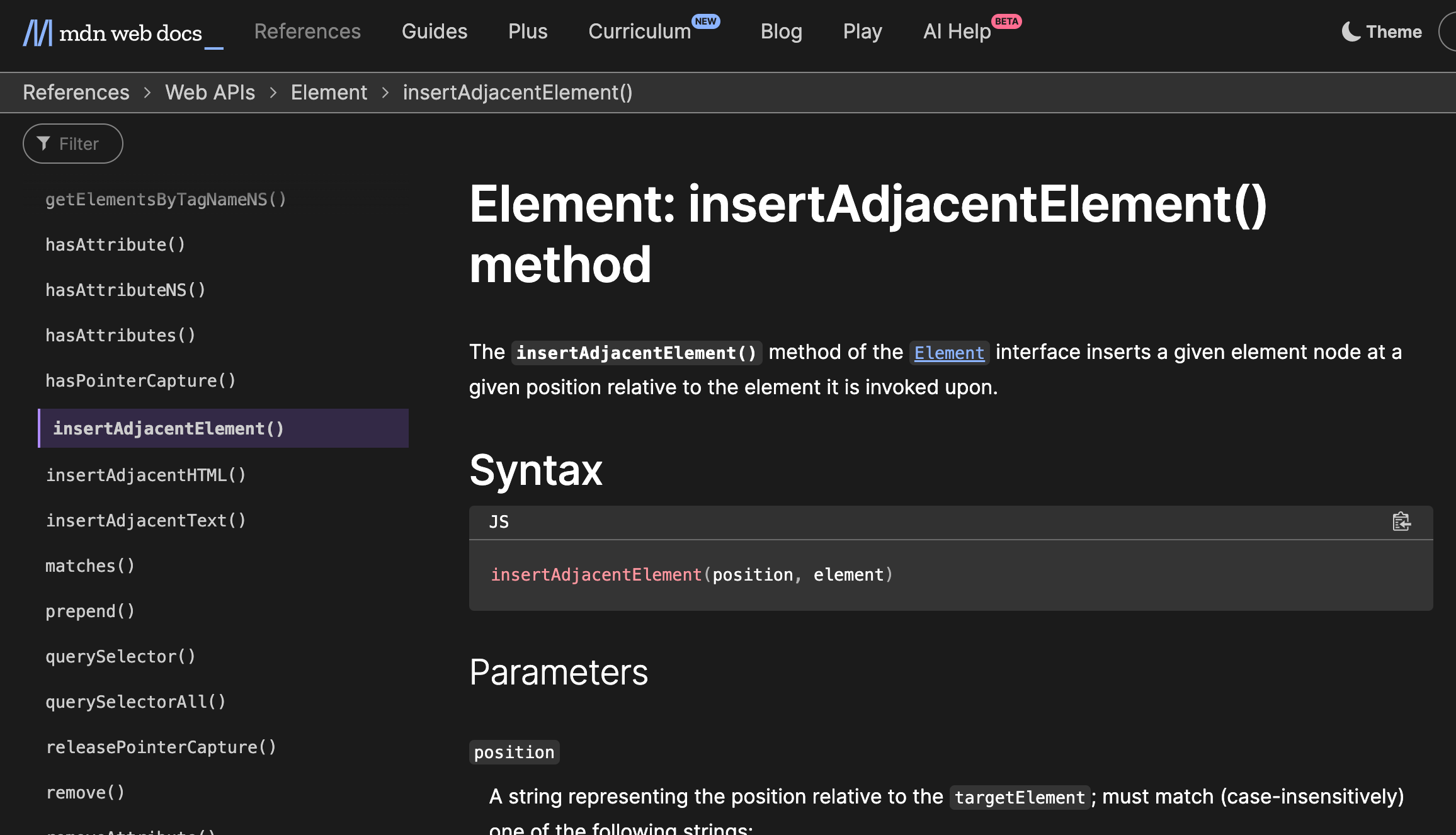

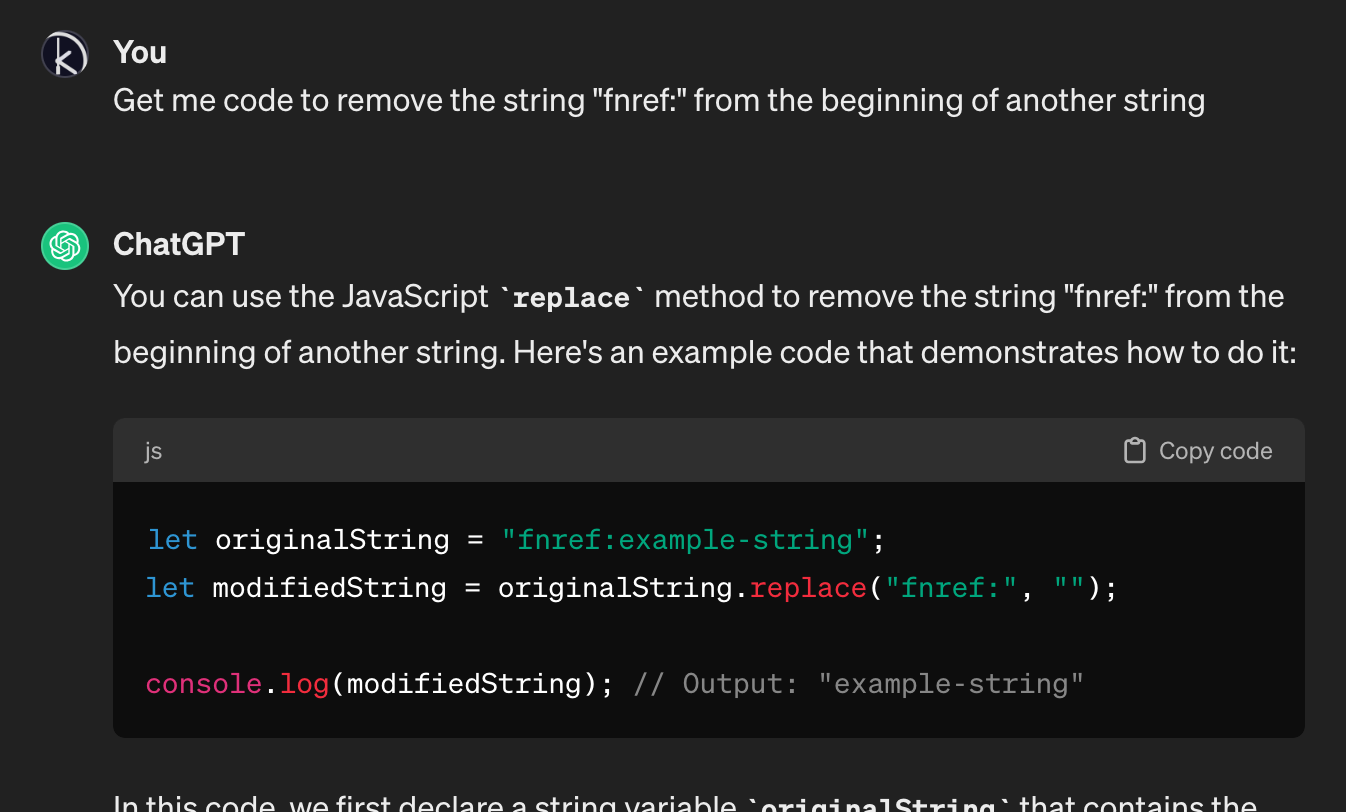

JavaScript Programming

Even for stuff I know reasonably well, asking the bot is often quicker than trawling through reference docs. For example, when programming, sometimes there’s something I want to do, but I don’t remember the API for it or know whether the API exists.

Yep, checks out.

Be slightly skeptical of the answers, especially if they don’t make sense after researching. Sometimes the AI doesn’t suggest the best answer right away, and it takes a few rounds of questioning.

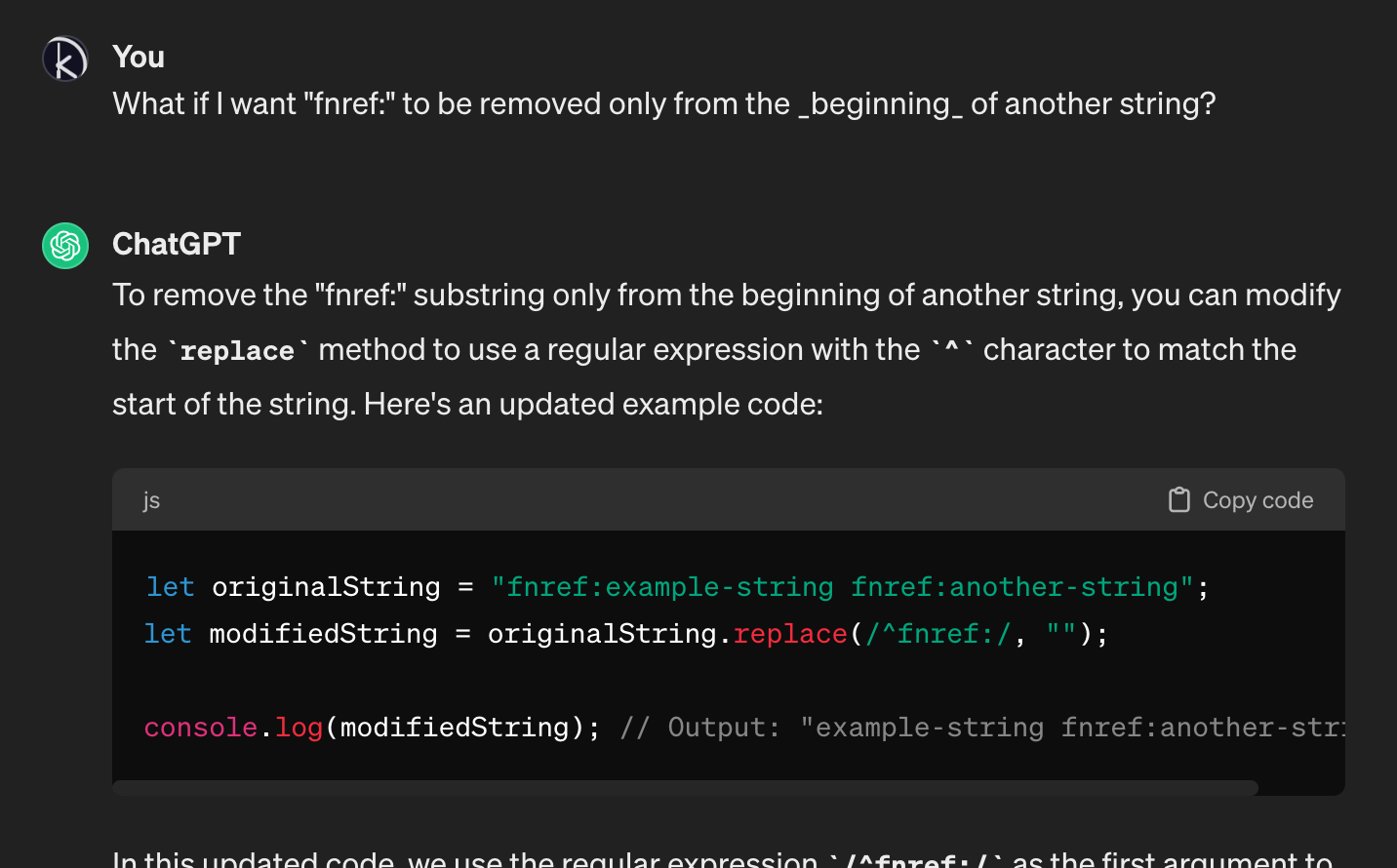

This isn’t quite right, because it would remove “fnref:” from anywhere in the string, not just the beginning of it.

This is what I actually ended up using.

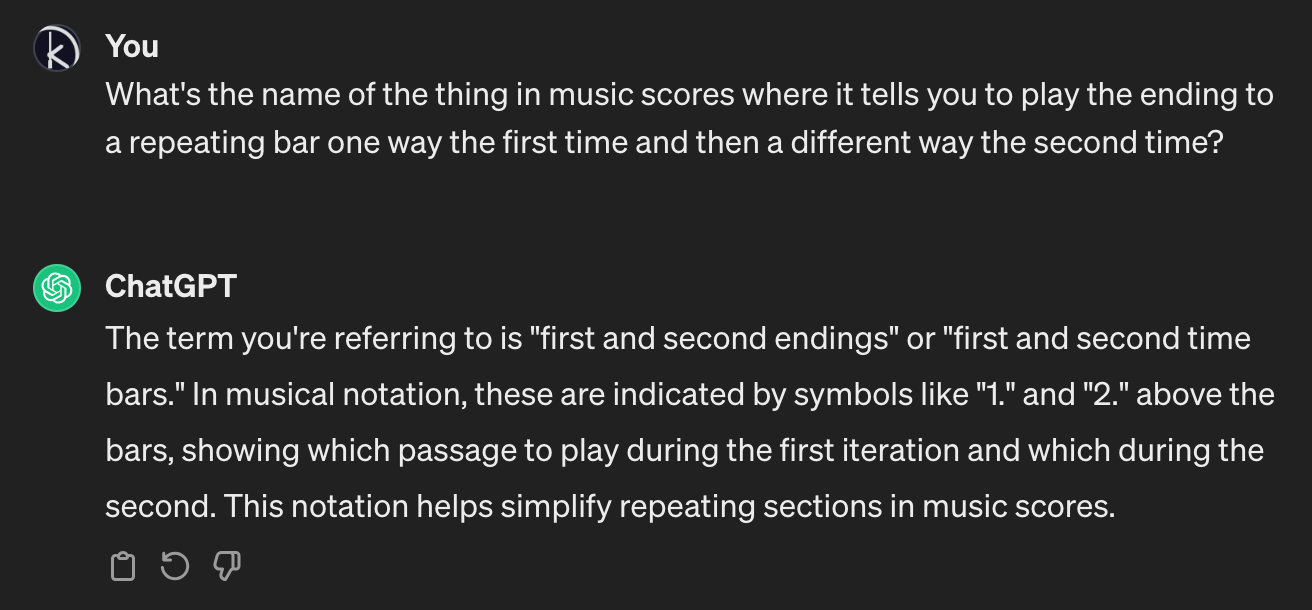

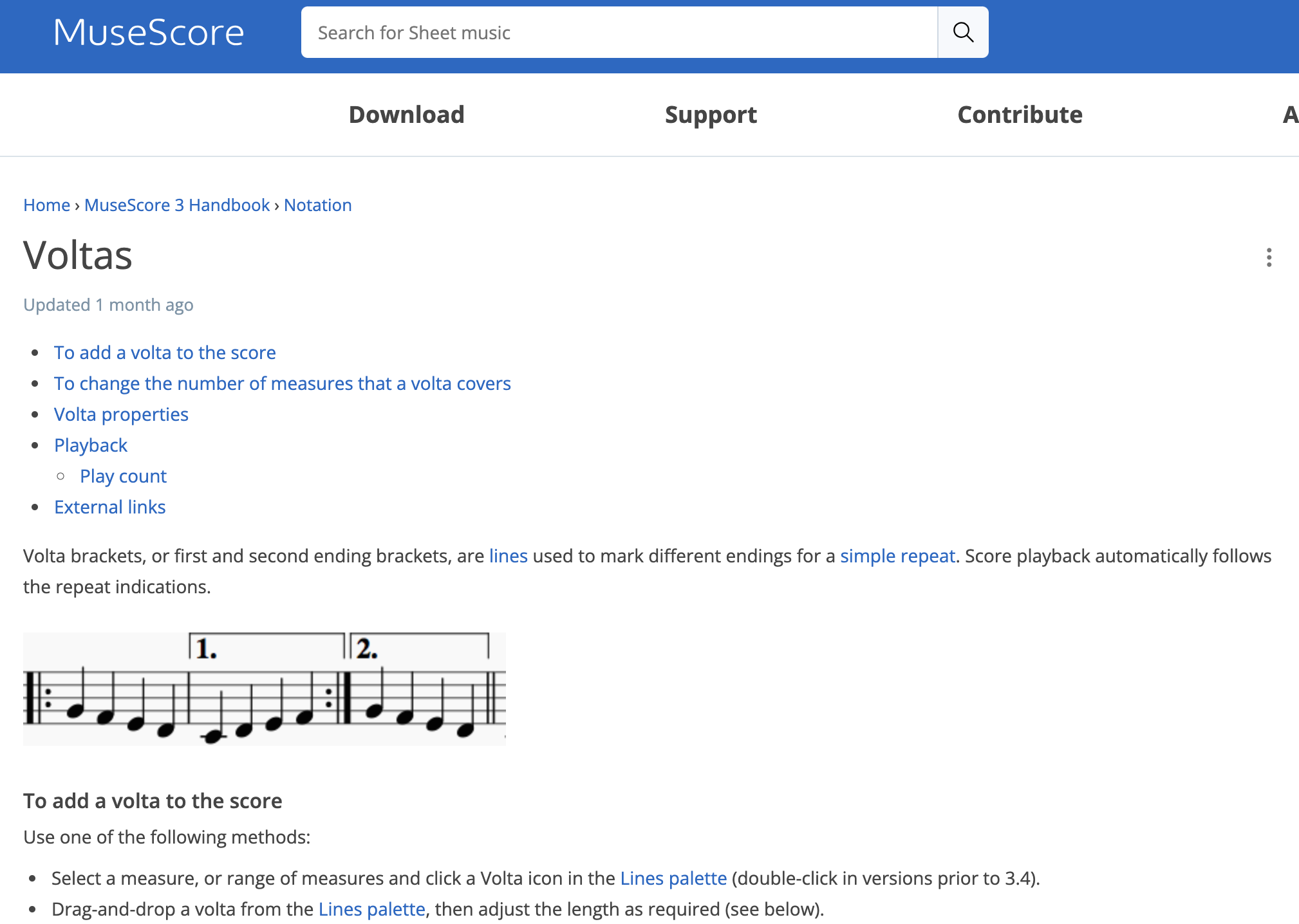
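The exact code is in the screenshots, so the following is only a rough sketch of the difference between "remove it anywhere" and "remove it only at the start". The function names and sample strings are hypothetical, and the naive version is my assumption about what an unanchored replacement looks like, not necessarily what the bot suggested.

```javascript
// Hypothetical names and inputs; "fnref:" is the prefix discussed above.
const PREFIX = "fnref:";

// Unanchored version: replace() with a string pattern removes the first
// match wherever it occurs, even in the middle of the string.
function stripAnywhere(id) {
  return id.replace(PREFIX, "");
}

// Anchored version: only strip when the string actually starts with the prefix.
function stripPrefix(id) {
  return id.startsWith(PREFIX) ? id.slice(PREFIX.length) : id;
}

console.log(stripAnywhere("fnref:1"));      // "1"
console.log(stripAnywhere("note-fnref:1")); // "note-1" (prefix removed mid-string)
console.log(stripPrefix("fnref:1"));        // "1"
console.log(stripPrefix("note-fnref:1"));   // "note-fnref:1" (left untouched)
```

An anchored regular expression such as `/^fnref:/` would be another way to get the same behavior. Either way, this is the kind of detail worth checking against the reference docs.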

Music notation

It’s not just programming and math that it’s good for. Here’s me using it to remember a term from music notation:

This kind of wording for a question would be hell for a search engine to deal with.

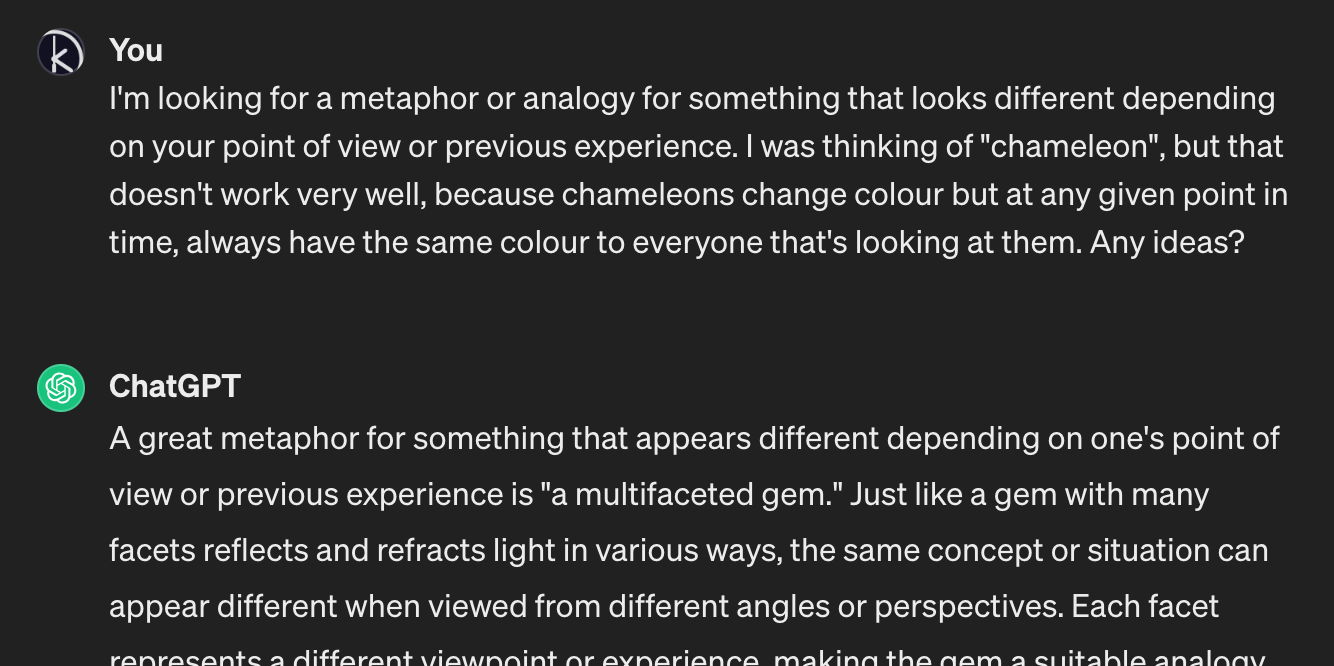

I specifically wanted it so that I could search for how to insert one of these in MuseScore 4, for a piece I was arranging.

I could have directly asked ChatGPT how to insert them, but I wouldn’t be confident in its answers: the free version of ChatGPT can’t search the web and I’m not confident in its knowledge of detailed MuseScore minutiae. So I used it as a jumping-off point for my own search instead, which led me to MuseScore’s documentation.

And it turned out ChatGPT’s answer was incomplete, because the more technical term for this is a “volta”. It entirely neglected to mention this. Always double-check its answers!

Brainstorming and suggestions

Another useful case is when you want a variety of suggestions.

For example, when doing the statistical analysis I mentioned above, I was considering what tools to use. I told ChatGPT I was split between using Python and a spreadsheet in macOS’s Numbers, and asked it for some more suggestions and alternatives. It suggested all of the following and gave a short description of each:

- The R Programming Language

- Excel

- Google Sheets

- Jupyter Notebooks

- MATLAB

- Tableau

- SPSS

- SAS

I looked into some of these, and ended up picking Jupyter Notebooks. It was by far the best choice, giving me the readability of a written document, data visualization in the form of embedded plots, and the programmability and solid library support of Python.[1] I would have wasted so much time if I had started out using Numbers only to realize that it was difficult or impossible to do complex statistical tests like ANCOVA.

Creative work

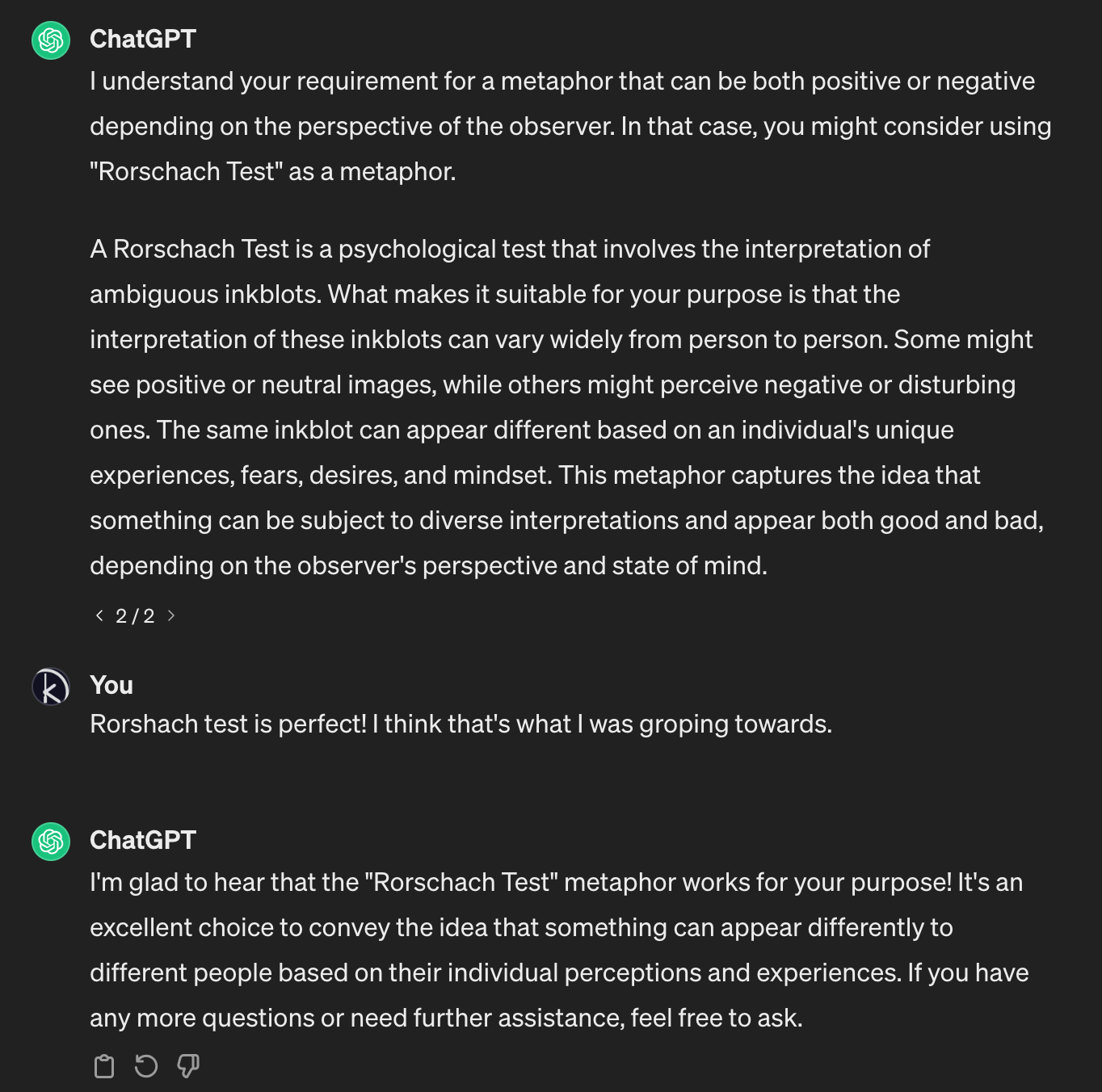

It can also help with more creative brainstorming. For example, when writing something online recently, I was reaching for a word to describe a specific concept, but couldn’t quite pin down a word or phrase for what I wanted to express. I decided to use ChatGPT as a sort of reverse dictionary:

That wasn’t quite what I was looking for, so I pressed it further, clarifying what I was looking for and asking it to give me more options. After a few more rounds of back-and-forth, it came up with this:

And it got my point across perfectly.

Know the strengths of your tools

The general lesson here is to know what your tools are good at, and to realize that the best part of your tools might not be the flashiest.

A lot of people look to generative AI and think that the generation part is the most interesting bit. To be fair, it’s definitely useful in a lot of circumstances. But just as important, if not more so, is its ability to understand written text, even when the output is more constrained. It would be good to see more applications leveraging this.

-

[1] Another big benefit was that it was free, unlike some of the other suggestions like MATLAB or Tableau.